Given what we know about the color of the points, how can we evaluate how good (or bad) the predicted probabilities are? This is the whole purpose of the loss function! It should return high values for bad predictions and low values for good predictions. For a binary classification like our example, the typical loss function is the binary cross-entropy / log loss. Some libraries ship it ready-made: one such function implements the cross-entropy loss used for logistic regression in the form required by EmpiricalRiskMinimizationDP.CMS.

If we fit a model to perform this classification, it will predict a probability of being green for each one of our points. In this setting, green points belong to the positive class (YES, they are green), while red points belong to the negative class (NO, they are not green).
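To make the "high for bad, low for good" behaviour concrete, here is a minimal sketch of the binary cross-entropy in plain Python. The function name, the toy labels, the toy probabilities and the clipping constant `eps` are all assumptions made for illustration; they do not come from any particular library.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy (log loss).

    y_true: labels, 1 for the positive class (green), 0 for the negative class (red).
    p_pred: predicted probabilities of the positive class (being green).
    eps:    clipping constant that avoids log(0); an assumption of this sketch.
    """
    if len(y_true) != len(p_pred):
        raise ValueError("y_true and p_pred must have the same length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep p strictly inside (0, 1)
        # Green points contribute -log(p), red points contribute -log(1 - p).
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical toy data: two green points followed by two red points.
labels = [1, 1, 0, 0]
good_predictions = [0.9, 0.8, 0.1, 0.2]  # confident and correct
bad_predictions = [0.2, 0.1, 0.9, 0.8]   # confident and wrong

good_loss = binary_cross_entropy(labels, good_predictions)
bad_loss = binary_cross_entropy(labels, bad_predictions)
print(f"good predictions: {good_loss:.4f}, bad predictions: {bad_loss:.4f}")
```

The loss for the good predictions comes out much smaller than the loss for the bad ones, which is exactly what we want from a loss function.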

Since this is a binary classification, we can also pose this problem as “is the point green?” or, even better, “what is the probability of the point being green?” Ideally, green points would have a probability of 1.0 (of being green), while red points would have a probability of 0.0 (of being green).

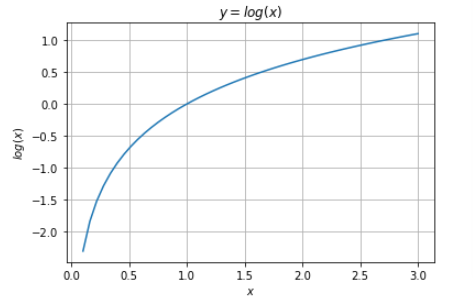

So, our classification problem is quite straightforward: given our feature x, we need to predict its label: red or green.

When we extend the trials to C > 2 classes, the corresponding probability distribution is called multinomial. Ignoring for the sake of simplicity a normalization factor, this is what it looks like: P ∝ p_1^(n_1) · … · p_C^(n_C), where n_j counts how many times class j is the correct one. Taking the logarithm gives log P = n_1 log p_1 + … + n_C log p_C, the log likelihood of a multinomial probability distribution, up to a constant that can be reabsorbed in the definition of the optimization problem. Some readers may have recognized this function: with a minus sign in front, it is usually called cross-entropy because of its connection with a quantity known from information theory that measures how much memory one needs to encode information.

In short, we will optimize the parameters of our model to minimize the cross-entropy function defined above, where the outputs correspond to the p_j and the true labels to the n_j. Notably, the true labels are often represented by a one-hot encoding, i.e. a vector whose elements are all 0's except for the one at the index corresponding to the positive class. This means that the sum log P reduces to a single element n_m log p_m, where m is the index of the positive class.

Turning the Model Output into a Probability Vector

In general, the output of an ML model (the logits) cannot be interpreted as probabilities across different outcomes; it is rather just a tuple of floating-point numbers. To be a probability vector, the output must satisfy p_1 + … + p_C = 1 and p_j ≥ 0 for all j.
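The two ideas above, turning logits into a probability vector and the collapse of the cross-entropy sum under one-hot labels, can be sketched in a few lines of plain Python. The softmax function used here is the common choice for this step, but it is an assumption of this sketch: the text above does not name a specific function. The logits and the helper names are hypothetical.

```python
import math


def softmax(logits):
    """Map a tuple of real numbers to a probability vector (p_j >= 0, sum p_j = 1)."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(n, p):
    """Cross-entropy -(n_1 log p_1 + ... + n_C log p_C), skipping terms with n_j = 0."""
    return -sum(n_j * math.log(p_j) for n_j, p_j in zip(n, p) if n_j != 0)


# Hypothetical model output for C = 3 classes.
logits = [2.0, 0.5, -1.0]
probs = softmax(logits)

# One-hot label: the positive class is at index m = 0.
one_hot = [1, 0, 0]
loss = cross_entropy(one_hot, probs)

# With one-hot labels the sum reduces to the single term -log p_m.
single_term = -math.log(probs[0])
print(probs, loss, single_term)
```

Because every n_j except n_m is zero, `loss` and `single_term` coincide: only the probability assigned to the correct class matters.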


Each possible outcome is described by what is called a binomial probability distribution, i.e. in each independent trial class j is the correct one with probability p_j.

There are three steps to transform cross-entropy loss into inverse one-hot loss. […] of the inverse one-hot vector and the symmetric cross-entropy function.

From the Multinomial Probability Distribution to Cross-Entropy

To begin with, we need to define a statistical framework that describes our problem. Usually, we are in a situation where each item belonging to a system (e.g. the picture of a pet) can be uniquely assigned to one among C ≥ 2 possible discrete categories (e.g. …). For each training example i, we have a ground truth label (t_i).

In PyTorch, this loss is available as the class `torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)`. This criterion computes the cross entropy loss between input and target. It is useful when training a classification problem with C classes. If provided, the optional argument weight should be a 1D Tensor assigning weight to each of the classes. This is particularly useful when you have an unbalanced training set.
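To see what a per-class weight does on an unbalanced training set, here is a plain-Python sketch of a class-weighted cross-entropy over a small batch. It is not the PyTorch implementation: the helper names and the toy batch are hypothetical, and the normalization (dividing by the sum of the weights of the target classes) is my understanding of how a weighted mean reduction is usually defined, so treat it as an assumption.

```python
import math


def log_softmax(logits):
    """Numerically stable log of the softmax probabilities."""
    shift = max(logits)
    log_sum = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return [z - log_sum for z in logits]


def weighted_cross_entropy(batch_logits, targets, weight=None):
    """Class-weighted mean cross-entropy for integer class targets.

    batch_logits: list of per-example logit lists, each of length C.
    targets:      list of ground truth class indices t_i in [0, C).
    weight:       optional list of C per-class weights (defaults to all 1.0).
    """
    num_classes = len(batch_logits[0])
    if weight is None:
        weight = [1.0] * num_classes
    total, norm = 0.0, 0.0
    for logits, t in zip(batch_logits, targets):
        total += -weight[t] * log_softmax(logits)[t]
        norm += weight[t]  # assumed normalization: sum of target-class weights
    return total / norm


# Hypothetical unbalanced batch: class 1 appears only once and is poorly predicted.
batch_logits = [[2.0, 0.0], [1.5, 0.5], [0.2, 0.1]]
targets = [0, 0, 1]

plain = weighted_cross_entropy(batch_logits, targets)
reweighted = weighted_cross_entropy(batch_logits, targets, weight=[1.0, 3.0])
print(plain, reweighted)
```

Giving the rare class a larger weight makes its poorly predicted example count more, so the reweighted loss is larger than the plain one and the optimizer is pushed harder to fix it.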
